QIRX SDR

Calibrate your RTL-SDR in 15 Seconds (Seconds, not Minutes)

Part I: Method and Measurement

A Tutorial by Clem, DF9GI

If you belong to the impatient faction and want to verify the claimed 15 seconds right away, jump directly to the section "Let's measure" and follow the instructions. Don't forget to start your stopwatch!

You might even reduce the calibration time further to about one second by reading the "Remark" at the bottom of this page!

After performing the calibration, I hope you will agree that the speed of the "DAB method" presented here is unbeatable.

Sometimes it is useful to have a receiver tuned exactly (whatever that means) to the commanded frequency.

Cheap RTL-SDR dongles (some of which I use) usually don't fulfil this requirement. Their frequency errors reach about 100ppm, sometimes even more. Ppm means "parts per million". For instance, if you command a receiver with 100ppm accuracy to a frequency of 500MHz, it might in reality be tuned 50kHz off the nominal frequency you requested, because at 500MHz 1ppm corresponds to 500Hz.
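The ppm arithmetic above is easy to script. This is a minimal Python sketch; ppm_to_hz and hz_to_ppm are hypothetical helper names, not part of QIRX or the rtl_sdr tools.

```python
def ppm_to_hz(ppm: float, frequency_hz: float) -> float:
    """Absolute frequency error in Hz for a relative error given in ppm."""
    return frequency_hz * ppm / 1e6


def hz_to_ppm(error_hz: float, frequency_hz: float) -> float:
    """Relative frequency error in ppm for an absolute error given in Hz."""
    return error_hz / frequency_hz * 1e6


if __name__ == "__main__":
    f = 500e6  # commanded frequency: 500 MHz
    print(ppm_to_hz(1, f))    # 500.0   (1 ppm at 500 MHz, in Hz)
    print(ppm_to_hz(100, f))  # 50000.0 (100 ppm at 500 MHz, in Hz)
```

Dividing by 1e6 (rather than multiplying by 1e-6) keeps these round-number examples exact in floating point.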

-

Relevance

For the normal user, this is of little relevance in many cases. Broadcast stations using the WFM (Wideband Frequency Modulation) scheme need not be tuned very accurately for reception. At 100MHz, a typical error of 50ppm amounts to 5kHz. With a bandwidth of 100kHz and a channel spacing of 200kHz, you can therefore forget about your dongle's inaccuracy.

However, there are other signal sources of interest. Consider airband, where the channels nowadays have a spacing of 8.33kHz. At 130MHz, an inaccuracy of 100ppm means an error of 13kHz, so you are inevitably tuned to the wrong channel (provided you are lucky enough to live in one of those countries where airband monitoring is not a criminal offence; for this reason, no spectrum example here).
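The same arithmetic shows how far off you land in units of channel spacing. Here is a Python sketch using the figures from the two paragraphs above; the function names are illustrative only.

```python
def tuning_error_hz(ppm: float, frequency_hz: float) -> float:
    """Absolute tuning error in Hz caused by a relative oscillator error in ppm."""
    return frequency_hz * ppm / 1e6


def channels_off(ppm: float, frequency_hz: float, spacing_hz: float) -> float:
    """Tuning error expressed in units of the channel spacing."""
    return tuning_error_hz(ppm, frequency_hz) / spacing_hz


if __name__ == "__main__":
    # WFM broadcast: 50 ppm at 100 MHz, 200 kHz channel spacing
    print(f"WFM:     {tuning_error_hz(50, 100e6):.0f} Hz = "
          f"{channels_off(50, 100e6, 200e3):.3f} channel spacings")
    # Airband: 100 ppm at 130 MHz, 8.33 kHz channel spacing
    print(f"Airband: {tuning_error_hz(100, 130e6):.0f} Hz = "
          f"{channels_off(100, 130e6, 8.33e3):.2f} channel spacings")
```

For WFM the error is a small fraction of one channel, while for airband it exceeds one and a half channel spacings.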

-

rtl_test

The rtl_test program belongs to the rtl_sdr suite, a collection of small but very useful programs for basic applications of the RTL-SDR hardware (QIRX uses its rtl_tcp program as the server for I/Q data). I learned about the necessary -p option of rtl_test in a Reddit discussion.

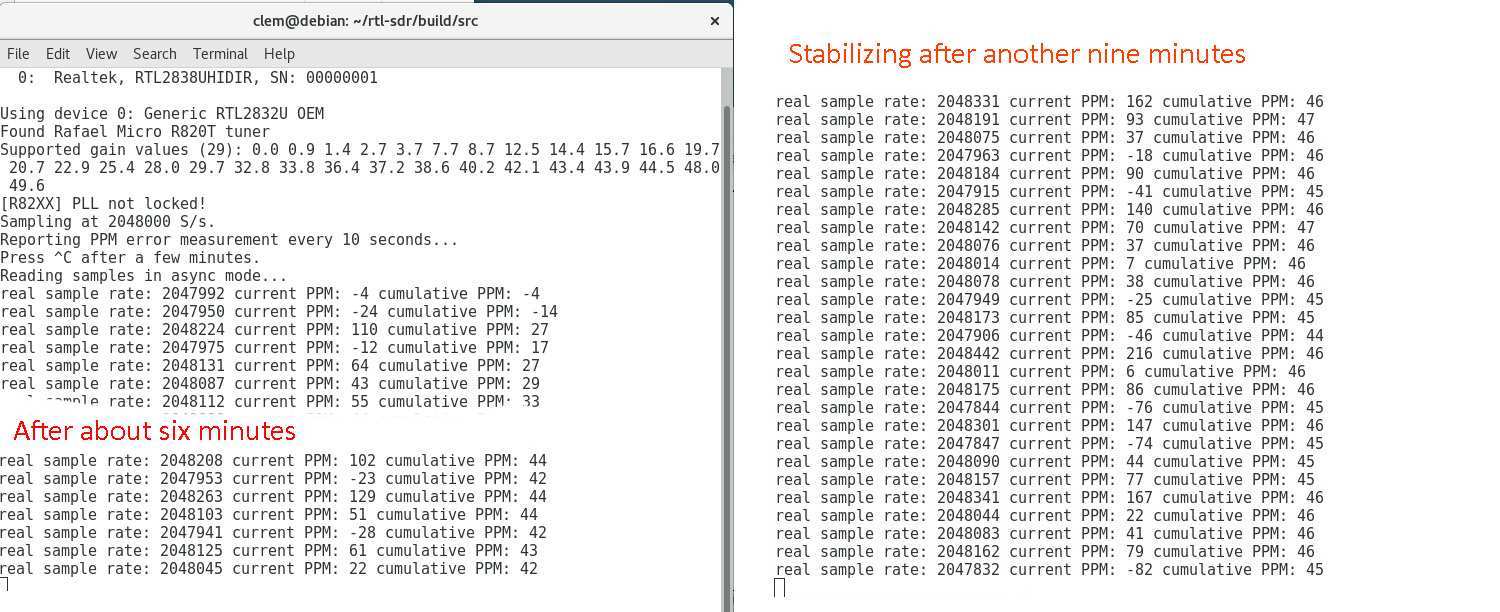

With its -p option, rtl_test does the job in about 10 to 15 minutes. In my case, the Windows version did not output any ppm value, but the Linux version worked. As discussed in that Reddit thread, the program uses the "high-resolution timer" of the PC as its frequency reference. In my case, the output stabilized after about 12 minutes. The two screenshots show the output after 6 and 15 minutes, respectively. The program was started in Linux with ./rtl_test -p (unfortunately, this line is cut off in the left picture).
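The principle can be sketched as follows: count the I/Q samples delivered during an interval timed by the PC clock, and compare the resulting rate with the nominal sample rate. This is a minimal Python illustration of that idea, not the rtl_test source code; the sample count and the 1 ms timing uncertainty are made-up numbers. The second function hints at why the readings need minutes to settle: a fixed timing uncertainty shrinks in relative terms as the measurement interval grows.

```python
def ppm_from_sample_count(samples: int, elapsed_s: float, nominal_rate: float) -> float:
    """Sample-rate error in ppm, with the PC clock (elapsed_s) as the reference.

    On typical RTL-SDR dongles the sample clock and the tuner share one
    crystal, so the sample-rate error in ppm equals the tuning error in ppm.
    """
    measured_rate = samples / elapsed_s
    return (measured_rate / nominal_rate - 1.0) * 1e6


def ppm_uncertainty(timing_uncertainty_s: float, elapsed_s: float) -> float:
    """Error contribution (ppm) of a fixed timing uncertainty over one interval."""
    return timing_uncertainty_s / elapsed_s * 1e6


if __name__ == "__main__":
    # Hypothetical run: 20,480,942 samples counted in 10 s at a nominal 2.048 MS/s
    print(f"{ppm_from_sample_count(20_480_942, 10.0, 2.048e6):.1f} ppm")
    # A hypothetical 1 ms timing uncertainty: large over 10 s, small over 10 minutes
    for seconds in (10.0, 60.0, 600.0):
        print(f"{seconds:5.0f} s -> +/- {ppm_uncertainty(1e-3, seconds):.1f} ppm")
```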

Despite the large scatter of the "current PPM" values, these fluctuations seem to average out after some minutes.

-

kalibrate-rtl

This program can be found at https://cognito.me.uk/computers/kalibrate-rtl-windows-build-32-bit/. It works by searching for GSM base stations and comparing their well-known, exact carrier frequencies with the frequencies measured by the RTL-SDR hardware. The interesting point of this software is its use of GSM frequencies near 1GHz. It would be interesting to compare its results with those of other methods using other reference frequencies.
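The core comparison can be sketched in Python, assuming a primary GSM-900 (P-GSM) downlink channel, whose nominal carrier frequency is 935MHz + 0.2MHz × ARFCN for ARFCN 1 to 124. This is not kalibrate-rtl's code: as far as I understand, kalibrate-rtl measures the offset via the base station's frequency correction channel, which is not reproduced here, and the observed carrier frequency in the example is invented. The sign convention (positive means the carrier shows up above its nominal frequency) is likewise an assumption of this sketch.

```python
def pgsm900_downlink_hz(arfcn: int) -> float:
    """Nominal downlink carrier frequency of a primary GSM-900 channel (ARFCN 1..124)."""
    if not 1 <= arfcn <= 124:
        raise ValueError("P-GSM-900 ARFCNs range from 1 to 124")
    return 935e6 + 200e3 * arfcn


def ppm_from_carrier(observed_hz: float, nominal_hz: float) -> float:
    """Relative error in ppm; positive if the carrier appears above its nominal frequency."""
    return (observed_hz - nominal_hz) / nominal_hz * 1e6


if __name__ == "__main__":
    nominal = pgsm900_downlink_hz(60)   # 947 MHz
    observed = 947_043_562.0            # hypothetical reading from the dongle
    print(f"{ppm_from_carrier(observed, nominal):.1f} ppm")
```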

Unfortunately, in my case the program did not output any ppm value. Although, over several tries, its necessary first run found up to three GSM base stations, the second run using any one of these stations produced no output. However, the author reports successful measurements on the website mentioned above. The program needs some time to search for GSM base stations, which might take several minutes. After that, according to the author, the ppm error of the RTL-SDR hardware should be output within seconds.

-

DAB as a Measurement Tool

Owing to its working principle, a DAB receiver must be able to find the center frequency of the multiplex it is tuned to quickly, compared with the other methods described. The difference in this respect between an "ordinary" WFM receiver and a DAB receiver is the very high accuracy with which DAB determines this center frequency. While a normal WFM receiver only needs an accuracy in the kHz region, a DAB receiver must do at least a factor of 100 better. This is due to the digital nature of the broadcast signal: DAB uses OFDM with subcarriers spaced only 1kHz apart in transmission mode I, so any residual offset must be small compared with this spacing.

Unsurprisingly, accurately recognizing the transmitter's frequency is one of the more difficult tasks in any DAB receiver, and an important part of the synchronization procedure.
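To illustrate why such fine frequency estimation is feasible in DAB, here is a sketch of one textbook technique for OFDM signals, cyclic-prefix correlation; it is not necessarily what QIRX does internally. It assumes transmission mode I parameters at 2.048 MS/s (useful symbol of 2048 samples = 1 ms, guard interval of 504 samples) and uses random complex samples in place of real OFDM content, since the estimator only relies on the prefix being a copy of the symbol's tail. Offsets that are whole multiples of the 1kHz carrier spacing are invisible to this estimator and have to be found in a separate, coarse step.

```python
import cmath
import math
import random

FS = 2.048e6       # sample rate in S/s
N_USEFUL = 2048    # useful symbol length in samples, transmission mode I (1 ms)
N_GUARD = 504      # guard interval (cyclic prefix) in samples, mode I


def make_symbol(rng: random.Random) -> list[complex]:
    """One symbol with cyclic prefix; random samples stand in for the OFDM content."""
    useful = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(N_USEFUL)]
    return useful[-N_GUARD:] + useful  # prefix = copy of the symbol's tail


def apply_offset(x: list[complex], offset_hz: float) -> list[complex]:
    """Shift the signal by offset_hz, as a mistuned receiver would see it."""
    return [s * cmath.exp(2j * math.pi * offset_hz * n / FS) for n, s in enumerate(x)]


def fine_offset_hz(x: list[complex]) -> float:
    """Estimate the frequency offset from the cyclic-prefix correlation.

    Each prefix sample repeats N_USEFUL samples later; an offset f rotates the
    repetition by 2*pi*f*N_USEFUL/FS, so the phase of the correlation reveals f.
    Unambiguous only for |f| < FS / (2 * N_USEFUL) = 500 Hz, half the carrier spacing.
    """
    acc = sum(x[n].conjugate() * x[n + N_USEFUL] for n in range(N_GUARD))
    return cmath.phase(acc) * FS / (2 * math.pi * N_USEFUL)


if __name__ == "__main__":
    rng = random.Random(1)
    rx = apply_offset(make_symbol(rng), -108.0)  # arbitrary test offset
    print(f"estimated fine offset: {fine_offset_hz(rx):.1f} Hz")
```

In this noise-free sketch the estimate recovers the -108 Hz test offset; with real signals, averaging the correlation over many symbols reduces the effect of noise.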

We want to use the frequency of a DAB transmitter as the reference frequency for our measurement. Thus, we must first make sure that its accuracy is sufficient for our purpose.

DAB Transmitter Accuracy: The requirements in the DAB standard with respect to the frequency accuracy of a DAB transmitter are surprisingly low. ETSI EN 302 077-1 V1.1.1 (2005-01) states in 4.2.2.3:

"The centre frequency of the RF signal shall not deviate more than 10 % of the relevant carrier spacing from its nominal value. This results in the following allowance for frequency deviation:

- transmission mode I < 100 Hz;

..."

A deviation of 100 Hz at a frequency of 200MHz corresponds to a deviation of 0.5ppm, an astonishingly high value for a transmitter in a Single Frequency Network (SFN).

In stark contrast to this relaxed requirement is a statement in an Application Note by Rohde&Schwarz, one of the leading manufacturers of DAB transmitters and measurement equipment. It is called "DAB Transmitter Measurements for Acceptance, Commissioning and Maintenance" and can be found on the web. In paragraph 3.4.1, "Signal Quality", R&S states:

"Single-frequency networks (SFN), in particular, place very stringent requirements on the frequency accuracy of a DAB transmitter of less than 10^-9. ..."

A frequency deviation of 10^-9 at 200MHz equals 0.2Hz or 200mHz, a factor of 500(!) better than required by the standard.

Anyway, I assume here that for our purpose the accuracy of the transmitter as a frequency reference is more than sufficient.
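To put these figures side by side, here is a short Python sketch. The 200MHz reference and the 50ppm dongle error are the representative values used earlier in this text, and the helper name is illustrative.

```python
def ppm(error_hz: float, frequency_hz: float) -> float:
    """Relative frequency error in ppm."""
    return error_hz / frequency_hz * 1e6


F_REF = 200e6                            # representative Band III frequency, as in the text
standard_limit_ppm = ppm(100.0, F_REF)   # EN 302 077-1 allowance, mode I: < 100 Hz
sfn_practice_ppm = 1e-9 * 1e6            # R&S figure for SFN transmitters: < 1e-9
dongle_ppm = 50.0                        # typical dongle error quoted earlier (assumption)

if __name__ == "__main__":
    print(f"standard limit: {standard_limit_ppm:.2f} ppm")
    print(f"SFN practice:   {sfn_practice_ppm:.3f} ppm = {1e-9 * F_REF:.1f} Hz at 200 MHz")
    print(f"standard vs. SFN practice: factor {standard_limit_ppm / sfn_practice_ppm:.0f}")
    print(f"worst-case reference error relative to dongle error: "
          f"{standard_limit_ppm / dongle_ppm:.1%}")
```

Even if a transmitter only just met the standard's limit, its error would be about 1% of a typical dongle error.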

-

Let's measure

What you need:

- QIRX or another suitable DAB receiver.

- An RTL-SDR receiver tuned to some multiplex. It must have warmed up for at least five minutes.

- Pocket calculator

- Room thermometer

- Sticker for your RTL-SDR dongle.

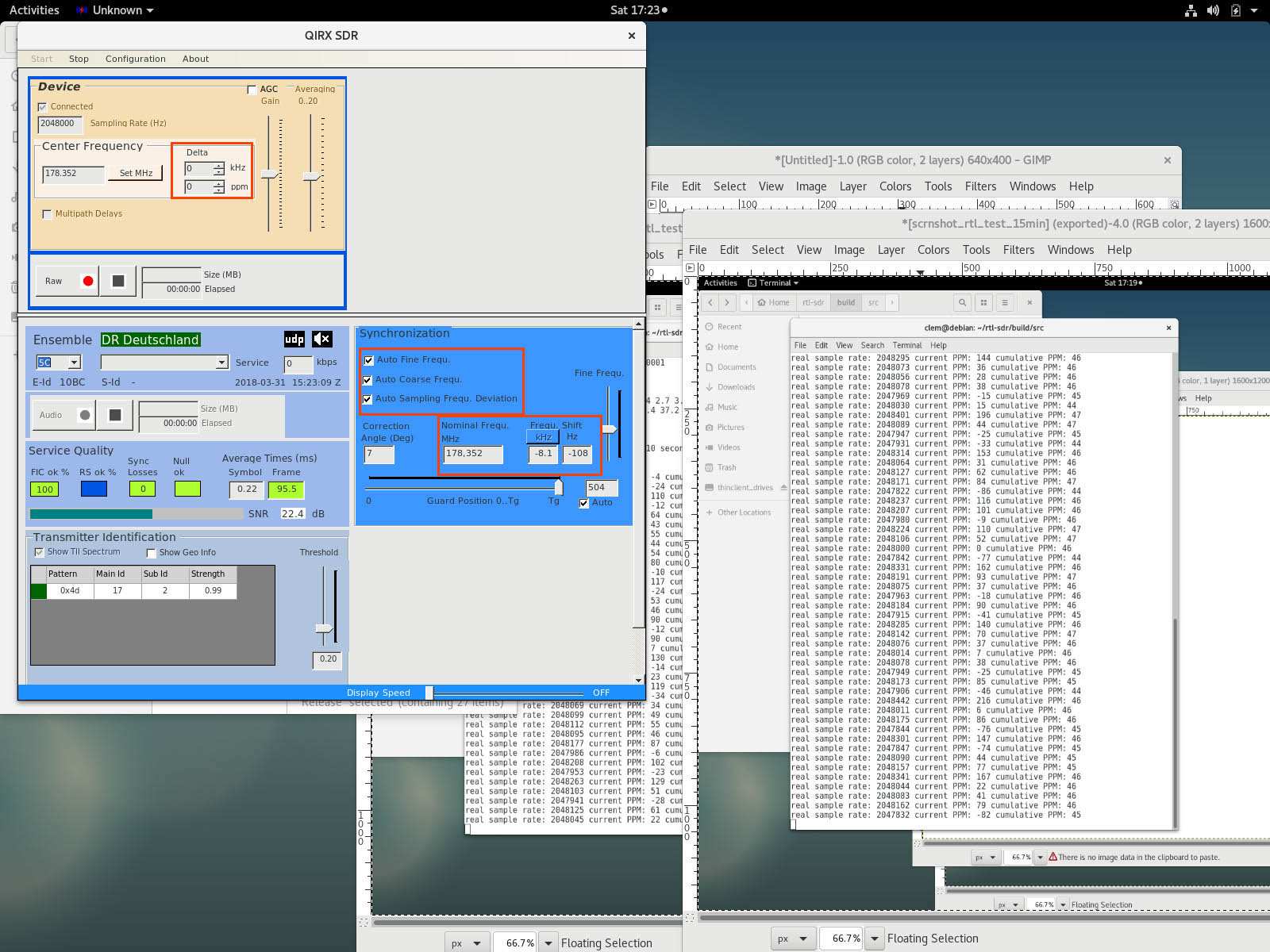

Step 1: Start QIRX

I made this measurement immediately after the rtl_test measurement shown above (which required Linux), so I worked with the Linux version of QIRX, which has no spectrum displays. The picture shows the complete Gnome screenshot of Debian, with the terminal window of the rtl_test measurement discussed above on its right-hand side. Of course, the Windows version of QIRX would serve the purpose equally well.

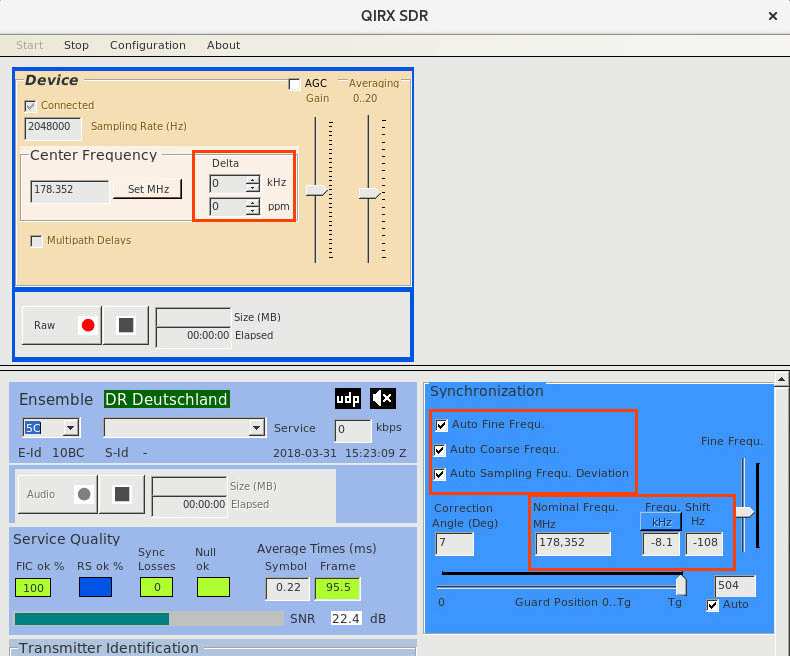

Make sure that the "Delta" and "ppm" text fields in the "Device" box are both zero (upper red rectangle).

Make sure that, as is usually the case, all three check boxes in the "Synchronization" box are checked (red rectangle in the middle).

The signal should be well stabilized and synchronized, with the green indicator lamps lit in "Service Quality". Selecting a service is not necessary for this measurement.

Step 2: Get the total frequency offset of your RTL-SDR

For better readability, I enlarge the QIRX window.

Add the two values "Frequ.Shift kHz" and "Freq.Shift Hz", with their correct signs. In our example, this results in -8.1kHz - 108Hz = -8208Hz. Inverting the sign gives 8208Hz.
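Step 2 can be written as a small Python sketch. The readout values are the example figures above, and total_shift_hz is a hypothetical helper, not a QIRX function.

```python
def total_shift_hz(shift_khz: float, shift_hz: float) -> float:
    """Combine the two Freq.Shift readouts (kHz and Hz) into one value in Hz."""
    return shift_khz * 1000.0 + shift_hz


if __name__ == "__main__":
    shift = total_shift_hz(-8.1, -108.0)
    correction = -shift  # invert the sign, as described in Step 2
    print(f"total shift: {shift:.0f} Hz, sign inverted: {correction:.0f} Hz")
    # total shift: -8208 Hz, sign inverted: 8208 Hz
```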

Step 3: Get the frequency error in ppm of your RTL-SDR

Now take your pocket calculator and divide 8208 by the "Nominal Frequ.MHz" value (the next field to the left), after converting the latter from MHz to Hz. In our example, this results in 8208Hz / 178352000Hz = 46.0*10^-6 = 46.0ppm.
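Step 3 in the same style: a sketch that takes the sign-inverted total shift in Hz and the "Nominal Frequ.MHz" readout. ppm_error is a hypothetical helper.

```python
def ppm_error(offset_hz: float, nominal_mhz: float) -> float:
    """Frequency error in ppm from the sign-inverted total shift and the nominal frequency."""
    nominal_hz = nominal_mhz * 1e6  # convert the MHz readout to Hz
    return offset_hz / nominal_hz * 1e6


if __name__ == "__main__":
    print(f"{ppm_error(8208.0, 178.352):.1f} ppm")  # 46.0 ppm
```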

Now scroll up on this page and compare with the value obtained by rtl_test. The agreement couldn't be better. As a result, rtl_test is reliable (allow me this little joke...). However, this perfect agreement could have been a coincidence. Usually you can expect different methods to agree within ±2ppm, which is already very good. But wait, we're not quite finished yet.

Step 4: Get your thermometer and read the room temperature value.

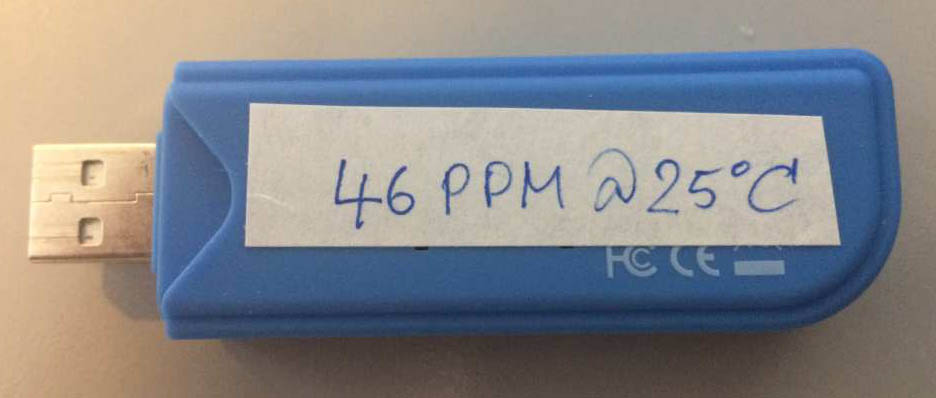

I had 25°C (the sun was finally coming in).

Step 5: Get the sticker.

Label it with "46PPM @ 25°C" and put it on your RTL-SDR dongle. Calibrated!

-

Questions?

Of course! We could ask ourselves whether we can now forget about expensive (OK, not so expensive any more) TCXO receivers, which usually have a frequency accuracy of around 1ppm. TCXO means "Temperature Compensated Xtal Oscillator" and denotes a quartz oscillator with a built-in compensation circuit that counteracts the temperature-dependent frequency drift of its crystal. The answer is "yes, but", with the "but" translating to "only at the temperature of the measurement", which in reality means "No, we can't".

You seem to belong to the curious, because you are still here! You might ask whether it is possible to check or even improve our measurement in some way. The answer is a clear "Yes, of course we can!"

Let's take a break, before we continue with part II of our little excursion.

Remark

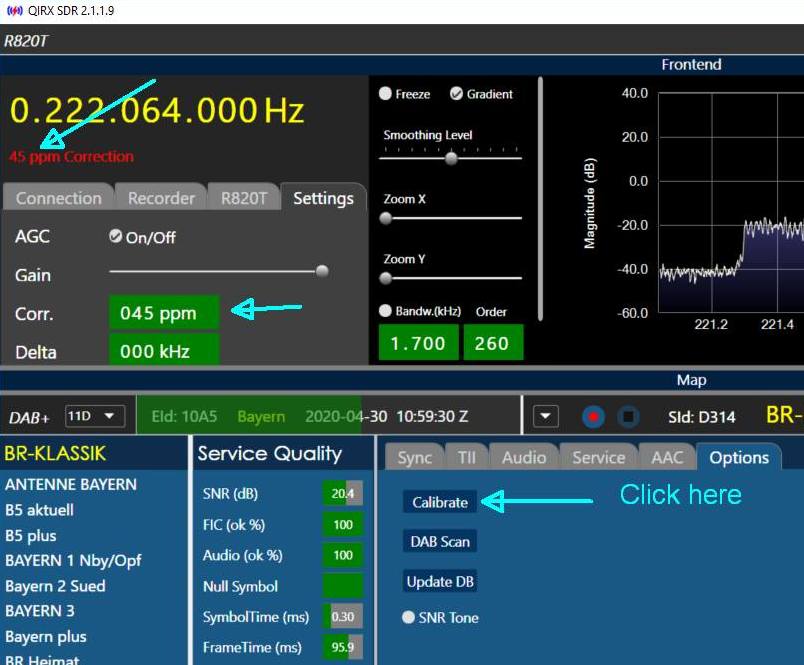

Since version 2 of QIRX, you can calibrate your receiver with a single mouse click: select the "Options" tab and click "Calibrate", as shown in this picture. From version 3.1.7 on, the calibration is performed with an accuracy of up to 1/100ppm, resulting, e.g. with SDRplay RSP receivers, in a frequency accuracy of about 10^-8.